The Issue

Imagine a scene in a video game with 15,000 perfectly reflective objects. Let's say all these objects are moving relative to each other (we'll assume no overhead for movement, since that is outside the scope of this blog). There are two main approaches to rendering these reflections. First, one might think to use ray-tracing. In most rendering engines that support it, enabling it is as simple as clicking a button, but in those that do not, adding support for ray-tracing is a daunting and complex task. Additionally, even at its most efficient, ray-tracing is still extremely demanding. This is why it took decades of optimization and tailor-made hardware for it to become commercially viable. If you are looking to support a platform without ray-tracing capabilities, you can either use screen-space reflections, which can only reflect objects that are on the screen, or you can use environment mapping. Foundations of Game Engine Development, Volume 2 is a great resource for more detail on what environment mapping is and how it is implemented. For the sake of brevity, we will not go into depth on environment mapping.

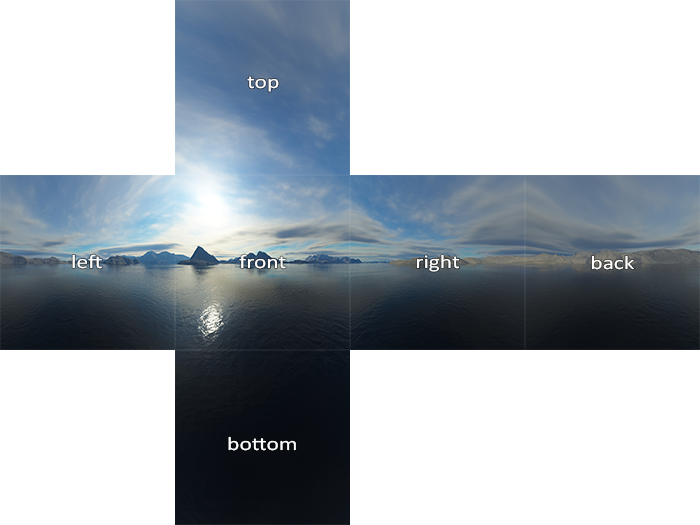

To put the process simply, with environment mapping, a 360-degree view of the environment is rendered from the perspective of the object receiving reflections onto a texture called an environment map. This texture is then mapped onto the object in a way that accounts for the direction of the surface and the direction of the camera to create the illusion of realistic reflections. An environment map is produced by rendering six 90-degree views of the environment from the same point, one looking down the positive direction and one looking down the negative direction of each axis. These six renders are then stitched into a cube texture.
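As a rough illustration of the mapping step described above, here is a minimal Python sketch. It is not taken from any particular engine: the function names `reflect` and `cube_face` are hypothetical, and the face-selection rule (pick the axis with the largest absolute component) is the standard cube-map convention rather than anything specific to this post.

```python
def reflect(incident, normal):
    """Reflect an incident direction about a unit surface normal: R = I - 2(N.I)N."""
    d = sum(i * n for i, n in zip(incident, normal))
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))


def cube_face(direction):
    """Return which of the six cube-map faces a lookup direction lands on."""
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    # The face is chosen by the axis with the largest absolute component.
    if ax >= ay and ax >= az:
        return "+x" if x > 0 else "-x"
    if ay >= az:
        return "+y" if y > 0 else "-y"
    return "+z" if z > 0 else "-z"


# A camera ray travelling down -z hits a surface facing +z and bounces straight back.
bounce = reflect((0.0, 0.0, -1.0), (0.0, 0.0, 1.0))
print(bounce, cube_face(bounce))
```

Note that the lookup uses only the reflected direction, not the position of the surface. That is the assumption discussed in the next paragraphs.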

Image from LearnOpenGL.com

There are two important things to note about this technique. The first is that this technique requires you to render 6 frames for each object receiving reflections. For this reason, environment maps are typically used sparingly, rendered at low resolutions such as 256x256, and/or updated at an independent frame-rate to conserve resources. The second is that the math that puts the environment map onto the object assumes that the point of reflection is the same as the point that the environment map was rendered at (which we'll refer to as the probe point). Put simply, it assumes that the surface of the object is at the center of the object.

This issue, unfortunately, is inherent to the nature of the technique, since the cameras that render the environment map are only rendering from one point, and thus, can only represent the reflections from that one point. The way to minimize this artifact is to reduce the distance from the surface to the probe point. This is typically done by using one probe per object (or even multiple for a large, reflective object).

If we now think back to our example of 15,000 reflecting objects, we quickly see that using this technique will not work. If we have one environment map per object, that's 90,001 frames (15,000 × 6 environment map faces, plus the final view) that we have to render before we can update the screen! Even if the environment map were rendered at 32x32, it would still be far beyond the capabilities of modern systems.
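The arithmetic behind that figure can be checked with a few lines of Python. The helper names are hypothetical, and the pixel count is only a lower bound on the work, since every one of those renders also has to process the scene's geometry.

```python
def frames_per_update(reflective_objects, faces_per_map=6, main_frames=1):
    """Renders needed per screen update with one environment map per object."""
    return reflective_objects * faces_per_map + main_frames


def probe_pixels(reflective_objects, resolution, faces_per_map=6):
    """Total environment-map pixels shaded per update at a square resolution."""
    return reflective_objects * faces_per_map * resolution * resolution


print(frames_per_update(15_000))  # 90001
print(probe_pixels(15_000, 32))   # 92160000
```

Even at 32x32, that is about 92 million environment-map pixels per update, spread across 90,000 separate renders of the scene.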

A Solution

In computer graphics, Level of Detail (LOD) is the technique of using alternate meshes with fewer faces for batches which cover a smaller area on the screen. More generally, LOD is the technique of rendering objects which are less prominent to the observer with lower fidelity, or rendering accuracy. With environment mapping, the main thing that sets it apart from perfectly realistic reflections is the aforementioned assumption regarding the point of reflection. So, we know that the farther away the surface is from the probe point, the lower the fidelity. We also know that the farther away a surface is from the camera, the smaller the surface will appear (thanks to the perspective divide), and thus, the lower the fidelity can be. So, if the relation of the position of the surface and the camera matches the relation of the position of the surface and the position of the probe point, we see that, by transitive relation, we can simply put the probe point at the camera.

If we do this, then the accuracy of a reflection increases as the reflection point comes closer to the camera and as the object becomes more prominent. This gives us the ability to render visually pleasing reflections for an entire scene with just one probe.

The New Issue

But, there's a big issue. Since we put the reflection probe at the camera, the reflection probe moves with the camera. This is the concept that makes this as powerful as it is, but this also causes the reflections to appear to move. A reflection probe is typically static in relation to a given object in the scene, but with this technique, the reflection probe moves in relation to the object. This makes the reflections appear to move on the surface twice as fast as they should. This issue is negligible for slow movement, but becomes very apparent for fast movement.

A Solution

If we wanted to smoothly transition from one camera position to another, we could move the camera from point A to point B, or we could fade from the view at point A to the view at point B. Each approach has its specific use cases. In our case, we want to smoothly maintain a probe at the camera as the camera moves, and we are moving the probe to achieve this. It is this appearance of movement that we want to avoid. So, by instead fading from the environment map at point A to the environment map at point B, we can keep the environment map at the camera without the appearance of movement. But, we must be careful! The faster this transition takes place, the more likely the viewer is to perceive movement, but the slower this transition happens, the less closely the probe will follow the camera. Let's look at how this could be simply implemented.

If we define a radius R, we can keep our probe points within a distance R of the camera. We can do this by checking if the squared distance between each probe and the camera is greater than R² and, if so, moving said probe to a point at distance R from the camera. Then, during the rendering, we can blend between probes based on their distance from the camera. Increasing R will reduce the accuracy of close-up reflections and will reduce the movement artifact. Decreasing R will increase the accuracy of close-up reflections and will increase the movement artifact.
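The steps above can be sketched in engine-agnostic Python. This is only an illustration under stated assumptions, not the implementation used in the game: the names `relocate_if_outside` and `blend_weights` are hypothetical, the new point is picked on the horizontal circle around the camera, and the linear falloff `1 - d/R` is one possible distance-based weighting that the text does not prescribe.

```python
import math
import random


def relocate_if_outside(probe, camera, radius, rng=random):
    """Return the probe position, moved to distance `radius` from the camera if too far.

    Positions are (x, y, z) tuples. Assumption: the new point lies on the
    horizontal circle around the camera, at the camera's height.
    """
    dx, dy, dz = (p - c for p, c in zip(probe, camera))
    if dx * dx + dy * dy + dz * dz <= radius * radius:  # squared compare, no sqrt
        return probe
    angle = rng.uniform(0.0, 2.0 * math.pi)
    return (camera[0] + radius * math.cos(angle),
            camera[1],
            camera[2] + radius * math.sin(angle))


def blend_weights(probes, camera, radius):
    """Normalized weights that fall off linearly with distance from the camera.

    Assumption: a probe at distance >= radius gets weight 0, so a probe that
    has just been relocated fades in instead of popping.
    """
    raw = [max(0.0, 1.0 - math.dist(p, camera) / radius) for p in probes]
    total = sum(raw)
    if total == 0.0:  # every probe sits on the boundary: fall back to an even blend
        return [1.0 / len(probes)] * len(probes)
    return [w / total for w in raw]


camera = (0.0, 1.0, 0.0)
probes = [relocate_if_outside(p, camera, 5.0) for p in [(1.0, 1.0, 0.0), (40.0, 1.0, 3.0)]]
print(blend_weights(probes, camera, 5.0))
```

With this particular falloff, the relocated probe contributes nothing at first and gains weight as the camera approaches it, which is one way to get the fade described earlier.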

In practice, we find that if we use enough probes, the result of blending between probes based on distance becomes visually indistinguishable from the result of blending between probes with equal weights. For most applications, "enough" is two or three. Additionally, in practice the result of picking a point at distance R is visually indistinguishable from the result of picking a random point within a square of half-width R centered on the camera. In applications in which the camera primarily moves on the horizontal plane, it is acceptable to only resample the horizontal coordinates of the probe. Below is an example of this system implemented in the free game engine Unity3D. For the sake of brevity, this example does not blend between probes; that could be implemented quite simply in Unity's Shader Graph editor.

This code takes in a reference to an array of probes, the position of the camera, the radius to keep the probes within, and the interval between probe updating. It then checks to see if the current frame is one that the probes should be updated on. If so, it runs through all the probes and checks if their distance from the camera is greater than it should be. If it is, it puts the probe at a random x-z coordinate within a range of (-radius, radius) and sets the y coordinate to match the camera. It then re-renders the environment map regardless of whether or not it was moved. This technique can render reflections for thousands of objects with comparable quality to standard environment mapping without a noticeable difference in performance compared to rendering reflections on three objects with standard techniques.
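For readers without Unity at hand, the behavior described in the paragraph above can be approximated with the following Python sketch. It is not the original Unity script: `Probe`, `update_probes`, and the `renders` counter (a stand-in for re-rendering the environment map) are hypothetical names, and the random offset is assumed to be relative to the camera position.

```python
import random
from dataclasses import dataclass


@dataclass
class Probe:
    position: tuple   # (x, y, z)
    renders: int = 0  # stand-in for "re-render the environment map"


def update_probes(probes, camera, radius, interval, frame, rng=random):
    """Call once per frame; does work only on every `interval`-th frame.

    Returns True if the probes were updated on this frame.
    """
    if frame % interval != 0:
        return False
    cx, cy, cz = camera
    for probe in probes:
        px, py, pz = probe.position
        dist_sq = (px - cx) ** 2 + (py - cy) ** 2 + (pz - cz) ** 2
        if dist_sq > radius * radius:
            # Random x-z offset in (-radius, radius); y matches the camera.
            probe.position = (cx + rng.uniform(-radius, radius),
                              cy,
                              cz + rng.uniform(-radius, radius))
        probe.renders += 1  # re-rendered whether or not the probe moved
    return True


probes = [Probe((0.0, 0.0, 0.0)), Probe((100.0, 0.0, 100.0)), Probe((3.0, 0.0, -2.0))]
for frame in range(1, 9):
    update_probes(probes, camera=(0.0, 2.0, 0.0), radius=10.0, interval=4, frame=frame)
print([p.renders for p in probes])  # [2, 2, 2]
```

One property of this sketch is that a point near a corner of the square lies farther than `radius` from the camera, so such a probe is simply resampled again at the next check.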

Conclusion

I would like to conclude by summarizing the implementation of this technique, its pros and cons, and how I found this technique.

To implement this technique, one must render a number of environment maps at certain positions and use an even blend of these environment maps for all specular reflections in the scene. These positions are checked at a fixed frequency to see if they are farther than a certain distance from the camera, and if they are, they are moved to a random point within said distance from the camera.

This technique is powerful, but only in certain situations. It requires you to render x high-resolution environment maps, with x being at least two. So, in a situation in which reflections can be baked or can be done with two or fewer environment maps, this technique becomes impractical. This technique is best for scenes which have a high quantity of reflective objects in a dynamic environment or for scenes which have more than two dynamically placed reflective objects. An example would be a game like Trailmakers, where there are many reflective objects that can be placed in arbitrary locations in an open-world setting.

I found this technique in working on an open-world, sandbox game that I am currently developing in Unity3D. In this game, I wanted realistic reflections on the cubes that you can place in the world. So, my instinct was, of course, to add a reflection probe to the scene. But, I had a question: "Where do I put this reflection probe?"

I think of a reflection probe as 6 extra frames, or 1/7th the frame-rate (even though that's not accurate), so it didn't even cross my mind to add multiple reflection probes. I also think of the world as being centered around the player (both in graphics and in game design). So, I knew that I wanted the probe to follow something that is always as close as possible to objects near the camera. So, naturally, I put it on the camera. This felt very wrong, since the camera is the destination of a reflection, not the source, but when I saw how beautiful the water was, all my questions evaporated and were replaced with ego.

I then took a step back (literally) and looked at the sides of the blocks I had placed, and saw the issue with the movement. It felt like the classic "If it's too good to be true, it probably is" rule.

While I was eating lunch, I thought about a Minecraft clone I had made and how the terrain worked. Basically, it moved chunks that were too far away from the player to the opposite side of the acceptable area for chunks and regenerated the chunk, creating a seamless, infinite terrain. I thought about how, if you zoomed really far out, it looked like the terrain was moving with the player. I thought, "Maybe I could use multiple probes and only move them when they get too far away."

At first, I figured that this would "technically" work, but would be demanding and would look very choppy. As I thought more about it, though, I realized that it would be very easy to test, just to be sure. So, I wrote up the code, and when I saw the reflections, I was floored! I couldn't tell that there were jumps in the reflections if I tried! But... I was down from 120 FPS to 15 FPS. I quickly realized that I had forgotten to copy the optimized settings for the probe when I created the new ones, so I went from 1024x1024 to 512x512, from 5 probes to 3, and from updating every frame to updating every 4 frames. It then ran at a solid 60 FPS with over a hundred reflective objects. Moreover, it ran at such a similar frame-rate with no reflective objects that I could not detect where the objects were removed by looking at the FPS graph. It was then that I decided to write this blog.

Written by Drake Rochelle.
